Leaderboard

Popular Content

Showing content with the highest reputation on 12/30/24 in all areas

-

I believe he meant as a boot drive, not as the storage drives 🙂 If so, an HDD as a boot drive shouldn't be an issue, at least not with TrueNAS itself, and I don't believe that HexOS adds much overhead that would require an SSD to work properly. An NVMe/M.2 SSD is often recommended because it frees up one SATA port (or two in the case of a mirror), of which there are not many left on modern consumer-grade hardware, and because they aren't that expensive anymore and consume less energy. The boot might take longer, but most of the OS runs in memory anyway. So if you are fine with a longer boot time, decent HDDs should be fine.

1 point

-

I plan to do a write-up once I get home and swap the hardware over to the new case.

1 point

-

Wow! That quick reply escalated rather fast... My experience is just n=1 and should not be applied to general ML/HPC considerations. @PsychoWards Uhm... just follow your original idea, as you already put some thought into it... nothing wrong with your initial approach 😉

1 point

-

Ah, thanks for the extra info, and now I think I get where you're coming from! You did do some research! 🙌

I am not sure if you're game for some tinkering, but the P40 has better FP performance due to Quadro drivers, and you might find a better deal if you switch to a hypervisor and unlock the vGPU capabilities of a consumer card with patched drivers. (The Tesla P40 mentioned has a GP102 die with native vGPU driver support.)

For reference: one of my machines hosts the Proxmox hypervisor on an i5-12500T with 128GB RAM and a GTX 1080 Ti (also GP102). The main reason for using the GTX 1080 Ti is exactly its similarity to the Tesla P40, albeit with only 11GB vs 24GB for the P40! As long as your ML jobs or models don't exceed 11GB, you won't experience severe penalties for it, if any. The GPU identifies as a P40 and is assigned within Proxmox to VMs; I specified several mdevs for different use cases in different VMs. My host also serves my HexOS test VM (containing Immich), a dedicated Plex (iGPU passthrough), Home Assistant OS, Docker/Kubernetes and many more. Since your CPU is (much) more powerful, I expect much better results/performance compared to my machine.

Only buy a Tesla P10/P40/T10/T40/Quadro P6000/RTX6000/RTX8000 if you can get a sweet deal on it or really need 24GB; otherwise go for a 1080 Ti 11GB, Titan X Pascal (both GP102), 2070 Super (TU104 version!), 2080 non-Ti/Super (TU104) or even a 2080 Ti (TU102). Here in the Netherlands a used 1080 Ti is about € 175-200,- and a 2080 Ti about € 300-400,-

But still: only buy an extra GPU if you need it. My 12500T has much less performance, and still my Immich app in HexOS processes all my pictures just fine on CPU alone. The only time HexOS feels 'laggy' is when I haven't synced my pictures for a while and the bulk upload strains my wireless network. The dedicated GPU became "free" after my upgrade to an AMD RX 6950XT, and I just haven't found a need to assign an mdev to a VM yet.

(All of the above only applies to your/my mentioned use case, of course. I have triples of Nvidia Tesla M40 24GB and AMD MI25 16GB for jobs/applications that do need more or benefit from more VRAM.)

1 point
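To put a rough number on the "as long as your models don't exceed 11GB" point, here is a minimal back-of-the-envelope sketch. It only counts the model weights (parameter count times bytes per parameter) and multiplies by an assumed 20% margin for activations and runtime overhead. The function names, the 1.2 margin and the 7-billion-parameter example are illustrative assumptions, not figures from the post, and the card sizes are treated as GiB for simplicity.

```python
def weights_gib(n_params: int, bytes_per_param: int) -> float:
    """Memory needed for the model weights alone, in GiB."""
    return n_params * bytes_per_param / 1024**3


def fits_in_vram(n_params: int, bytes_per_param: int, vram_gib: float,
                 overhead: float = 1.2) -> bool:
    """True if the weights plus an assumed overhead margin fit in VRAM.

    The 1.2 default is a hypothetical safety margin, not a measured value.
    """
    return weights_gib(n_params, bytes_per_param) * overhead <= vram_gib


if __name__ == "__main__":
    # Hypothetical example: a 7-billion-parameter model stored as 16-bit floats.
    n_params, bytes_per_param = 7_000_000_000, 2
    for card, vram in [("GTX 1080 Ti (11GB)", 11), ("Tesla P40 (24GB)", 24)]:
        verdict = "fits" if fits_in_vram(n_params, bytes_per_param, vram) else "does not fit"
        print(f"{card}: {verdict}")
```

Real memory use depends heavily on the framework, batch size and precision, so treat this as a first sanity check before shopping for a 24GB card, not as a sizing tool.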

-

I currently use Plex. I put my first Plex machine up in 2010 and got on board way back then. Because of that, my monthly payment is really cheap, has never changed, and I get all features. So for me, I am staying there. I have tried Jellyfin, but it has never worked as seamlessly for me as Plex. I have the client on my phone and iPad, and it just works anywhere I am. On the last cruise my wife and I went on, I had free WiFi on the boat, and at night we watched movies from my server while at sea.

1 point

-

Proxmox is built for the sole purpose of running VMs efficiently, so it'll likely run smoother and use fewer resources than HexOS. But I don't use it myself, so this is all hearsay. I'm also not familiar enough with it to tell you for certain whether your CPU is strong enough, but my gut feeling is that Ubuntu + Windows at the same time might be tough for your CPU, since it's a quad core that's over a decade old.

1 point

-

Damn. I mean... you gotta know when to hold 'em and fold 'em. But that doesn't mean you can't get a nice 7.1 wherever you end up post retirement, right?

1 point

-

(I don't dabble in AI, but I have a couple of clusters running model rendering and CAM/CFD simulation jobs.)

If your current setup fulfils your needs, there is no need for extra hardware or peripherals for offloading. Without knowing your current setup, I think you will have to run into some issues before needing more processing power. Current-gen Intel, AMD and Nvidia hardware can all handle some sort of AI/HPC offloading. Once you do expect a bottleneck, you'll probably have a much better idea of which process needs more offloading/acceleration. It really depends on your use case(s). You can then look up what you need.

If you really want to buy something now and price is of no concern: you can't go wrong with CPU processing power (higher core/thread count), RAM and any current-gen GPU. Intel Arc B-series, AMD RX 7000 and Nvidia RTX 4000 have acceleration and offloading capabilities (depending on your field or software requirements) and should be mentioned in any software usage guidelines. Nvidia has better support in general, so an RTX (or RTX Quadro) will always be useful. Usually, more cores (TMU/ROP) and more VRAM are better.

On the other hand: buying (expensive) equipment now really doesn't save money in the future or even yield better results, if any. If you don't need it now, you won't need it in the near future, and on the horizon there may be better options available. It depends mostly on the software support and requirements, I guess. Just beware: it (again) really makes no sense to buy an RTX 3080 (for example) if you have no use for it now, because your application or software stack might not be optimal.

Current lightweight applications rarely turn into resource hogs... Plex/ffmpeg seems a good example: Intel iGPUs up to the current gen already outperform any dedicated AMD/Nvidia GPU in realtime transcoding quality, and even when AI optimisation/processing comes into the picture, you can still offload the processing (via a docker application container) to a remote processing node (whether CPU or GPU supported). I don't see a future path where these applications by themselves would support/warrant realtime image enhancements via AI.

Or in the case of Immich: both CPU and GPU (Nvidia) load balancing is supported, and you are most likely already using your current setup without bottlenecks. I find it unlikely that you would put a better CPU/GPU in your Immich server just to "future proof" your current server machine. You are more likely to just put your current GPU in the server and have it do its thing in the background. You can then treat yourself to a shiny new GPU for gaming, and use that if you need to help Immich a bit, but for the most part Immich can do its thing on CPU just fine.

Maybe your Immich example is just the wrong example, but my advice, in case that was not expected 😉 is to just wait until you have a clear target. Machine learning was already ubiquitous long before AI and machine learning became trendy with the general public. You are not missing out on anything, because you are already on the train, and unless you are unhappy with your seat, there is no need to upgrade your seat ticket.

1 point

-

I use Jellyfin since it's not bloated like Plex and is free. It also gives me the freedom to use almost every file format. I do stream content to the Apple TVs I have throughout the home using the Infuse app (I think it was like $99 for a lifetime sub). I don't think I've had to transcode at all since I set up Infuse on the Apple TV. I had a family member who used to watch movies streamed to his iPad using the same Infuse license. On my Android devices I don't even need a third-party app, since the Jellyfin app works fantastically. The flexibility is just convenient to me, since I don't always want to watch on my TV.

1 point

-

I'm hoping that there is either some warning or there will be Black Friday sales going forward, but there is no way to tell now when the full release will come out. Theoretically, figuring out TrueNAS shouldn't be needed at all when HexOS enters full release.

1 point

-

I have a feeling we'll see a lot more systems turn on after the holidays. The growth rate is actually pretty perfect for us right now: enough to get a good sample size, yet not so much that it overwhelms our ability to manage and respond to issues. I also think there is just a massive undercurrent of users that bought HexOS just to get in at the good price, with no real intention of deploying until we reach or get closer to 1.0.

1 point

-

My assumption would be there's a lot of folks like me who bought 2 or more licences to have offsite backups etc. So the install counts will be low until V1, when it's stable enough to run mission-critical services.

1 point

-

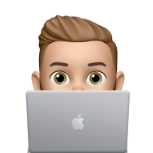

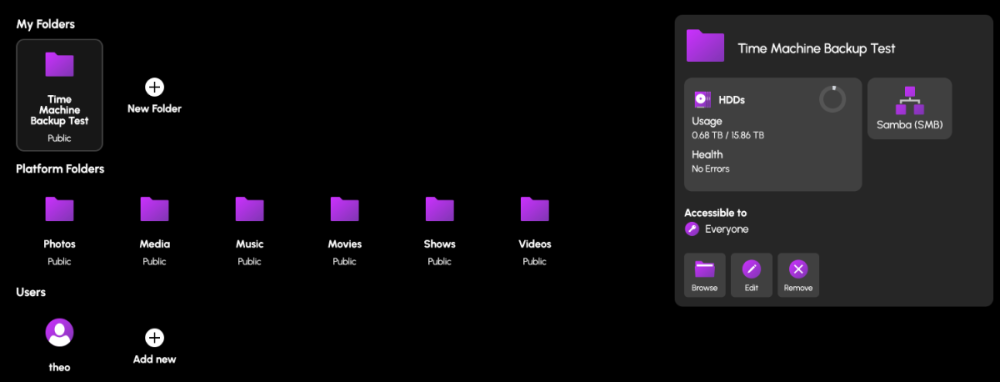

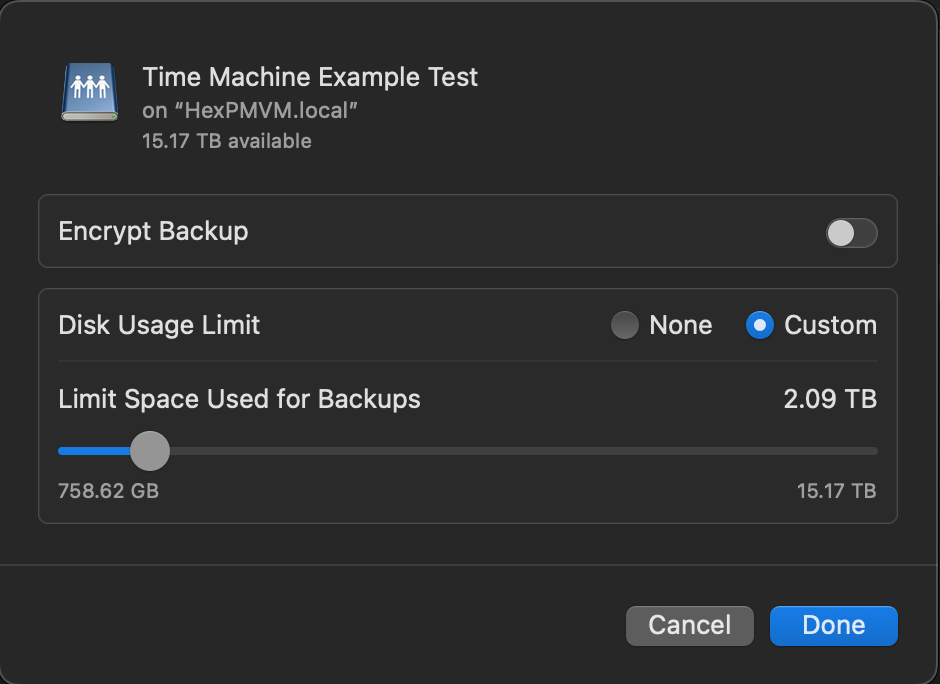

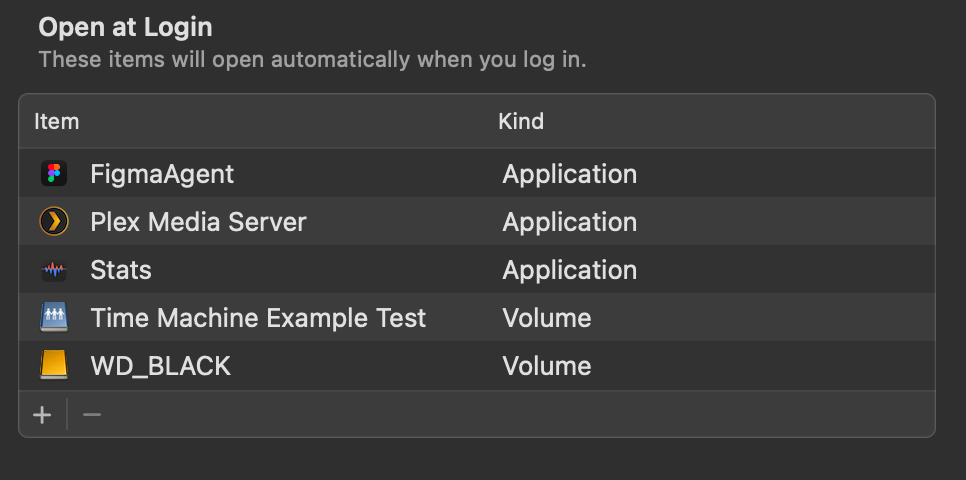

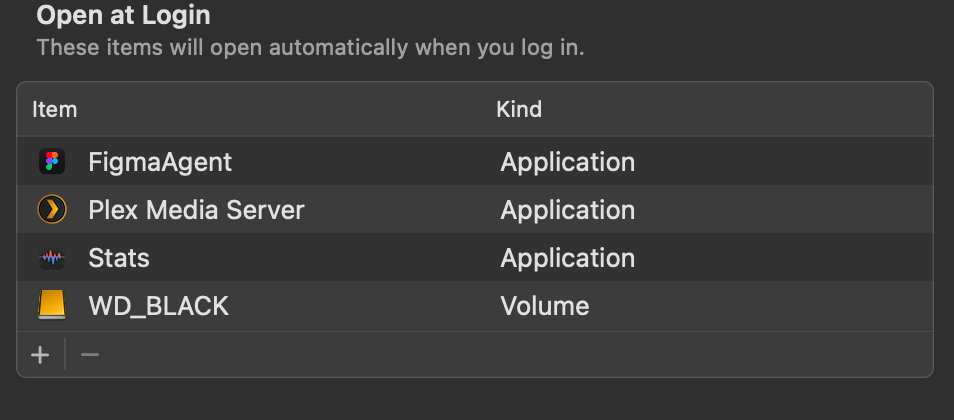

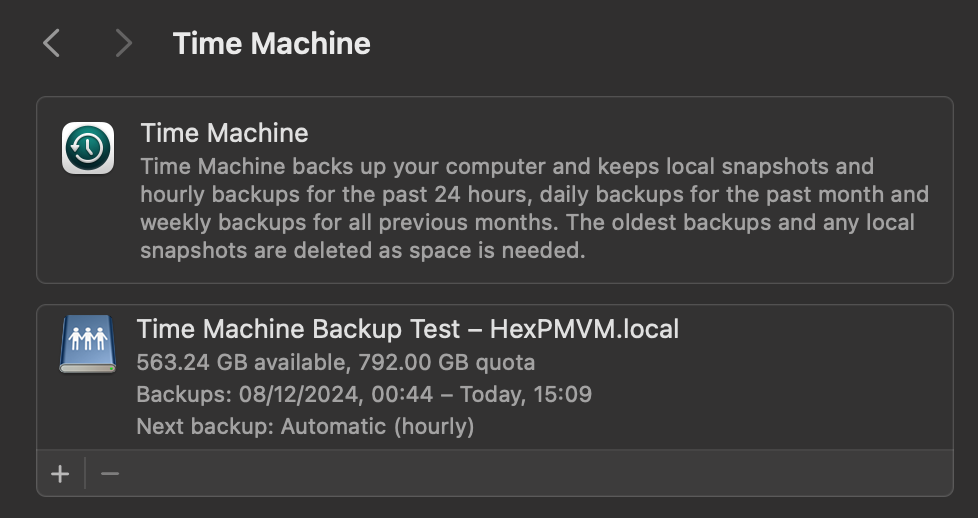

I know this is a request for a more 'one click' Hex TM integration, but in case people want to test this now, you can do this in Hex + TN today. (I've already had mine running for a week without issue.)

TIME MACHINE INSTALL GUIDE

1. Create a Folder and name it time machine (or a custom name).
2. Set the Folder permissions (I left mine open; add user permissions here to restrict access).
3. Navigate to the TrueNAS UI (Server IP > Username: truenas_admin, Password: server password from install).
4. Navigate to the Shares tab; you should see your newly created share. Click on edit (pencil).
5. On the Purpose drop-down, change to > Basic or multi user time machine.
6. Press save/apply, and it'll prompt a restart of the SMB process.
7. Go to your Mac settings > General > Time Machine.
8. Click the + icon and locate your time machine share, then click setup disk. FYI, if you aren't already connected to your Hex server, you'd need to do so now. Either search for the server in the network tab of Finder OR connect to the server with Finder > Go > Connect to Server > SMB://[THE IP OF YOUR HEX SERVER]
9. You can now choose to encrypt your backup with a password and, if you choose, restrict the total disk usage the backup will have.
10. You should now see your time machine backup set up. This will start automatically, but you can create a backup straight away if you choose.

AUTO CONNECT SHARED DRIVE SETUP

Now that could be it, but to ensure your Time Machine backup will always occur, you need to ensure your Mac is always connected to your Hex server. To do this, we need to add the share to the login items.

1. Open Settings > General > Login Items > click the + icon.
2. Locate your connected time machine share, then click open.
3. You should now see the drive in the login items.

That's it, you should be all set up and running.

1 point
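If you keep notes or a small script for several Macs, the address typed into Finder's Connect to Server dialog can be built like this. This is only a sketch: `smb_url` is a hypothetical helper, the IP address and share name are placeholder assumptions, and appending the share name to the URL is optional (the guide itself only uses the server IP).

```python
from urllib.parse import quote


def smb_url(server_ip: str, share_name: str = "") -> str:
    """Build an smb:// address for Finder > Go > Connect to Server.

    Spaces in the share name are percent-encoded so the URL stays valid.
    """
    url = f"smb://{server_ip}"
    if share_name:
        url += "/" + quote(share_name)
    return url


if __name__ == "__main__":
    # Placeholder values; substitute your own server IP and folder name.
    print(smb_url("192.168.1.50"))
    print(smb_url("192.168.1.50", "time machine"))
```

Percent-encoding matters here because a folder named "time machine" contains a space, which is not valid in a raw URL.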

-

Hey, one of the creators of HexOS talked in a Q&A about migration from TrueNAS. (https://youtu.be/cTPVd4YCDZ8&t=1566)

1 point

-

In TrueNAS, you could just import the existing pool, and your data would still be intact. But right now, in beta, HexOS ignores all existing pools and data, treats the drives as new and wipes everything. Let's hope the developers acknowledge this and let us import existing pools.

0 points