
Everything posted by Duncan Innes

-

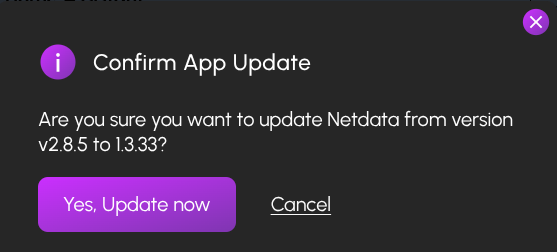

I have 4 apps, and all of them require an update today. When I click the "Update now" button, each one shows a message similar to this. I'm fairly sure the first number is the version of the app itself, and I think the second number is the version of the HexOS YAML for the app. It would be much nicer to display this detail in a way that is easier to understand.

-

Notification: Updates are available for 1 application

Duncan Innes replied to Duncan Innes's question in OS & Features

I think the "experimental" tag will stay there until HexOS is fully released. Once the GA release comes out, I would expect the 'experimental' tag to be dropped from the fully supported curated apps. But that's a good point about update cadence. It would be nice to see whether an app gets updates daily, weekly, monthly or rarely; that would help me decide which apps are most appropriate for me. Some way of applying app updates automatically would also be nice. I don't want to be fiddling with the Apps page just to ensure all apps are up to date. My phone can apply updates automatically, and so can my TV. HexOS should have this ability at some point.

Notification: Updates are available for 1 application

Duncan Innes replied to Duncan Innes's question in OS & Features

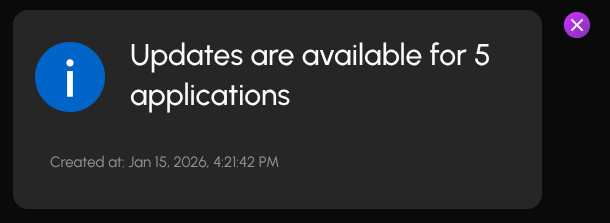

Today, for the first time, I know which apps require an update: (hint: I only have 5 apps installed 🤨)

Notification: Updates are available for 1 application

Duncan Innes posted a question in OS & Features

When a user clicks a notification for an app update and the slide-in panel appears on the right of the page, it would make sense for the notification to link to the app that requires the update. Today I have to navigate to the app listings and then into Installed Apps. But even *then* I have no indication of which app requires an update; I have to click on each one to find out. Am I missing something that shows which app needs updating?

App Cards - more information

Duncan Innes replied to Duncan Innes's topic in Roadmap & Feature Requests

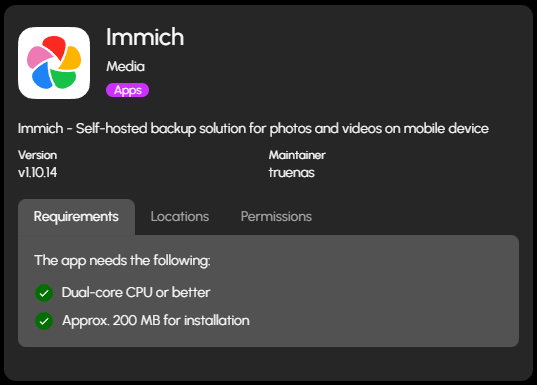

I've probably got more to unpack than is optimal for a single feature request, so let's start with the installed app cards having tabs, like the card shown for the curated apps. The tabs for an installed app could be, for example, Summary, Info, Details, Monitor, or something similar. This would allow the main face of the card to remain unchanged for the standard user, with the current information and options displayed in the default "Summary" tab. The other tabs would then provide further information about the installed app. Perhaps similar details to the cards shown for an app in TrueNAS? I know we can view them there too, but isn't the dashboard supposed to keep us at arm's length from TrueNAS?

Other examples would be performance stats for the app itself. How much is this app hitting the CPU? How much RAM is it using? How much disk access comes from this app?

Another nice tab would be some basic checks that the app is running. This might have to tie into the curation of an app: curating some basic tests and checks to show the health of the app. Does it respond on port X? Is there a health check status within the app to display?

Even a tab with some basic logs from the app would help. I haven't investigated where apps do their logging yet (I would certainly prefer all my logs to go to a central log consolidator at some point), but how about a tab showing any high-level logs? Anyway, the future is open if the...

I would like to see more detail in the app card on the dashboard. Perhaps a tab approach like the curated app cards? Either way, the dashboard would benefit from being able to display more information about an app without having to delve into TrueNAS. The curated app card would also benefit from showing suggested system requirements rather than only minimum requirements. I'm trying to get my head round how I can monitor a full-blown setup: something with all my storage on board, running upwards of a dozen apps. I would like to be able to monitor how much resource each of these apps is using, so that capacity planning is possible and a badly configured app can be detected. For example, how can I easily see when a specific app is hogging my CPU/RAM/IO even though I'm not using it? Are there indicators of bad behaviour, or of a misconfigured app?

-

Just wondering how easy or hard it is to time-share a GPU between various applications. If I have a single GPU, can it be shared between multiple application tasks, like Immich ML, Plex transcoding, Jellyfin transcoding, an AI container, etc.? I know that you can pass a GPU through to a virtual machine, but I'm not sure whether the same mechanism is in play for containers. I guess my ultimate question is whether multiple GPUs are required to do justice to multiple workloads across multiple apps, or whether we need to move towards a generic GPU container that the various apps can call upon for their workloads. Cheers

-

Personal opinion inbound: yes to the jump from a single quad-core CPU to two hex-core CPUs. But you really need more RAM as well. I would definitely go for more RAM, as the HexOS requirements state 8GB minimum and you only have half that. See what various RAM upgrade options cost. Aim for 8GB minimum, but see what prices you can get for 16GB, 32GB or larger kits. Go for registered RAM if possible, as that gives the highest ceiling overall. As mentioned by @ubergeek, knowing which HBA is in the system is also key for your storage options.

-

Beelink ME Mini - https://www.bee-link.com/products/beelink-me-mini-n150 It has 6 M.2 slots. Attach an external USB-C 4-bay HDD enclosure to cover your HDD needs.

-

Sorry, I missed this bit out. It's a pre-made mini computer. My test rig is a BeeLink SER7, which is similar to the BeeLink SER8, just one generation back. It's ideal for my testing at the moment. It boots from an NVMe drive in a USB enclosure and uses 2x 2TB internal NVMe drives for the storage pool.

-

I would agree with the above. I am currently running on a rather speedy Ryzen 7 7840HS with 64GB RAM, and I am frustrated at how difficult it is to get the iGPU or NPU used for anything. When I pull the trigger on a proper build, it will likely be an Intel CPU: i5 or i7 or whatever they're calling them now. I would like to know what others think, especially about the problems with the 13th/14th-generation chips. Desktop or mobile chips? It would be fascinating to see something like the BeeLink ME Mini with a more capable CPU in it. Lunar Lake perhaps? But that might be straying into power territory where a custom NAS build is necessary.

-

Guidance/Advice For First Time DIY NAS Build With HexOS

Duncan Innes replied to TrOnzl3r's topic in Hardware

Yeah, I made a similar mistake by using a 1TB M.2 boot drive. Totally wasted, for now. I don't know if there's a plan to make more use of it, or to make it more secure and tolerant of failure. But my next install will see the 1TB boot drive swapped out for a 256GB boot drive I have sitting around doing nothing. Even that is overkill for now. My other two M.2 drives are SK hynix Gold P31 2TB PCIe NVMe Gen3 M.2 2280, chosen for power efficiency. Can you do link aggregation with multiple 2.5G NICs? Worth a look. I'll certainly be hoping for LAGG when I get round to building a HexOS 1.x system (i.e. non-beta).

Do you have the App Curation Templates documented anywhere? I was wondering whether I could read the documentation and try my hand at building a template.

-

Let's not be too mean-spirited about HexOS. Cutting some slack at this point is, I think, an acceptable approach. I share the frustrations of many, but I also sympathise with the developers, who have found themselves dealing with a much bigger community than expected. Being in OS Engineering myself, I totally sympathise with the desire to get things right before letting it out the door. That said, I am personally frustrated that I cannot get the latest functionality in a fortnightly drop to help test it. It's a tricky balance. I have my fingers crossed for the Q2 update to arrive during Q2, with an expanded landscape for testing. My greatest issue with HexOS unfortunately cannot be resolved by the devs: I am now speccing an imaginary server to meet an ever-expanding list of requirements 😏

-

I've done all the ElectricEel updates and haven't had any problems. Just don't go to Fangtooth; I think that's the advice?

-

It's not so much new gear for me, but I'm looking for anything that maximises M.2 slots. I have never bothered to own or build a NAS before now, but the power available at this point is pretty amazing, so going all-flash seems key for me. I used to track how filesystems were getting on with tiered storage, as I had planned a slow spinning-rust tier plus a modest amount of SSD for a fast tier. But these days I just don't see the need for HDDs, so tiered storage no longer seems necessary. I do like the idea of the Jonsbo N5 with water cooling though 🙂

-

PLEASE make more frequent releases, monthly preferably. And a roadmap with more detail than "targeting 1.0 for this year" would be invaluable. Immich publishes a very simple timeline roadmap. I would personally read every technical detail you cared to write about HexOS, but a simple timeline roadmap would probably go a long way towards satisfying most people. The illusion of progress is more satisfying than the illusion of no progress.

-

Different software streams

Duncan Innes replied to Duncan Innes's topic in Roadmap & Feature Requests

So I suppose this articulates what I'm getting at. I would rather have some idea of when updates are planned than have to keep checking the forums to find out whether there's been a new release. I need to rebuild my HexOS system at the moment, and I might wait for a new ISO to install if I had any idea of timescales.

It would be nice if users could select which development stream they want to be part of. Stable will obviously not become a thing until the 1.0 release, but when that happens it would be nice to choose whether to sit on the Stable, Testing or Dev software stream, with the obvious buyer-beware caveats for the Dev and Testing streams. The number of users at sign-up suggests there are a lot of tech-savvy people here, and this community could be leveraged for much wider testing than is currently possible. It would also be neat to have more blog updates than at present. Perhaps monthly? It's not a great feeling to pay for something and then get sparse updates on how things are progressing. The way Immich presents its roadmap is very nice while keeping everything simple.