All Activity

- Past hour

-

Nerull joined the community

-

Next post - does HexOS have a support problem?

TheCamba replied to gingerling's topic in Show & Tell

What an entertaining read. Each post is full of ups and downs, drama and humor. Best of luck on your next adventure; it looks like you are diving into apps. Since you are sticking to the HexOS interface, you will only see Plex and Immich. Which one are you doing first?

- Today

-

Moppadogfish joined the community

-

TheBlackStig joined the community

-

zu_kreativlos joined the community

-

Balkyn joined the community

-

Kilcal joined the community

-

If HexOS is touted as the accessible NAS platform, I think prioritizing services with broad appeal would make the most sense in order to attract more everyday users, not just existing homelab users. Getting the infrastructure set up for the following would be incredibly helpful and would probably help a large number of users:

- Audiobookshelf: audiobook and ebook management
- Calibre: ebook management
- Plant-It: plant maintenance and care reminders
- Home Assistant: home automation
- mealie.io: recipe management
- Jellyfin: FOSS Plex alternative
- Actual Budget: personal finance management
- Romm: retro title management
- Steam Headless: (this one is a stretch) a headless Steam streaming server

-

I've only seen this one mentioned once in this thread, so I'm repeating it as an additional vote: Rustdesk server.

-

jstebbings joined the community

-

Stocker joined the community

-

Hi Todd, in brief, yes and yes (I think...). I was using 2 SATA SSDs for 'quick' storage and 4 SSHDs (500GB HDDs with 8GB of onboard flash; yes, they were a thing...) for bulk storage. This was stable enough but not very quick (when running VMs), and the power draw felt a little excessive. I have considered write endurance, but I think that, as with anything, as long as you are aware of what you're building it for, you can select suitable components. Take WD's offerings, for example: the WD Green 1TB claims an endurance rating of 80TBW, whereas the Blue version offers 600TBW and the Red boasts 2000TBW. From what I can see this is on par with SATA SSDs (as they essentially use the same or similar flash technology), and I wouldn't even compare it to HDDs, as most of the newer ones I've seen use SMR, which is not suitable for NAS applications no matter how the manufacturer tries to sell it... If you compare these on TBW alone (PCIe link width and speed are irrelevant in this case), then although the Red is double the price of its lower-endurance counterparts, it's supposedly 25 times more durable than the Green, so it's 'good value' if the TBW rating is your main consideration. In this version of my build I've used WD SN740 drives, which have an advertised endurance of 200TBW. These drives came from new laptops that were being upgraded, so they didn't cost me much 😉 and are ideal for this proof of concept / learning experience.
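The endurance comparison above is simple arithmetic. Here is a minimal sketch in Python that uses only the advertised TBW figures quoted in the post. The helper names are hypothetical, and the 100 GB/day write load is an assumed figure for illustration, not a measurement from this build. TBW is a rated figure, not a guarantee of when a drive will fail.

```python
# Advertised endurance ratings (terabytes written) as quoted in the post.
ADVERTISED_TBW = {
    "WD Green (1TB)": 80,
    "WD Blue": 600,
    "WD Red": 2000,
    "WD SN740": 200,
}

GB_PER_TB = 1000  # drive vendors use decimal units
DAYS_PER_YEAR = 365


def endurance_ratio(tbw: float, baseline_tbw: float) -> float:
    """How many times more data a drive is rated to absorb than the baseline drive."""
    return tbw / baseline_tbw


def years_to_rated_tbw(tbw: float, gb_written_per_day: float) -> float:
    """Years of constant daily writes before the advertised TBW rating is reached."""
    return tbw * GB_PER_TB / (gb_written_per_day * DAYS_PER_YEAR)


if __name__ == "__main__":
    baseline = ADVERTISED_TBW["WD Green (1TB)"]
    daily_load_gb = 100  # hypothetical write load; substitute your own workload
    for name, tbw in ADVERTISED_TBW.items():
        print(
            f"{name}: {endurance_ratio(tbw, baseline):.1f}x the Green rating, "
            f"~{years_to_rated_tbw(tbw, daily_load_gb):.1f} years at {daily_load_gb} GB/day"
        )
```

With these figures the Red works out to 25x the Green's rating, which matches the post. The years-to-rating column mainly shows how strongly the answer depends on the assumed daily write load.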

-

petervc joined the community

-

PeppermintButler joined the community

-

Thank you! I'll check it out 🙂

-

MikeU changed their profile photo

-

It does not break anything; others and I have been using it this way for months.

-

I am hoping we get 1.0 before Black Friday (I have no basis for this hope), but if that does happen, I can't imagine a Black Friday sale bringing HexOS down from the full price of $300 to $100.

-

Given how wildly successful the $99 promotional price was, I think a case could be made that another promo like that would make sense if it brought in similar numbers. Not necessarily the same numbers, but enough for the math to work. For example, selling 4x as many licenses in a given period would still double the income, even at 50% less revenue per license.
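The promo argument above is gross-revenue arithmetic. Here is a minimal sketch in Python. The function names are hypothetical, and the calculation ignores costs such as payment fees and extra support load, so it says nothing about true net income. The $300 and $99 prices are the figures mentioned elsewhere in this thread.

```python
def relative_revenue(volume_multiplier: float, price_fraction: float) -> float:
    """Promo-period revenue relative to a baseline period at full price.

    volume_multiplier: licenses sold during the promo / licenses sold at full price
    price_fraction:    promo price / full price
    """
    return volume_multiplier * price_fraction


def break_even_volume(full_price: float, promo_price: float) -> float:
    """Volume multiplier at which a promo exactly matches full-price revenue."""
    return full_price / promo_price


if __name__ == "__main__":
    # The post's example: 4x the licenses at half the revenue per license.
    print(relative_revenue(4, 0.5))  # 2.0
    # Prices mentioned in the thread: $300 full price vs. a $99 promo.
    print(round(break_even_volume(300, 99), 2))  # 3.03
```

So at $99 versus $300, a promo only comes out ahead on gross revenue if it sells a little over 3x as many licenses as the full price would have in the same period.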

-

As an aside, is all your storage NVMe? Is it generally accepted that this type of storage is stable enough for a NAS, with all the writing and rewriting? Just curious.

-

And to be realistic, it sounds like a lot of "Black Friday" licenses were purchased, so unless they need a fast cash influx, what would really be the incentive? This is a business, after all. Not to mention, Black Friday is not far off if they feel the need to bring on more people. But I think they have a lot to develop and test with us before then. These are exciting times in the consumer NAS space.

- Yesterday

-

DomSmith started following lspci - Evolution of a NAS build.

-

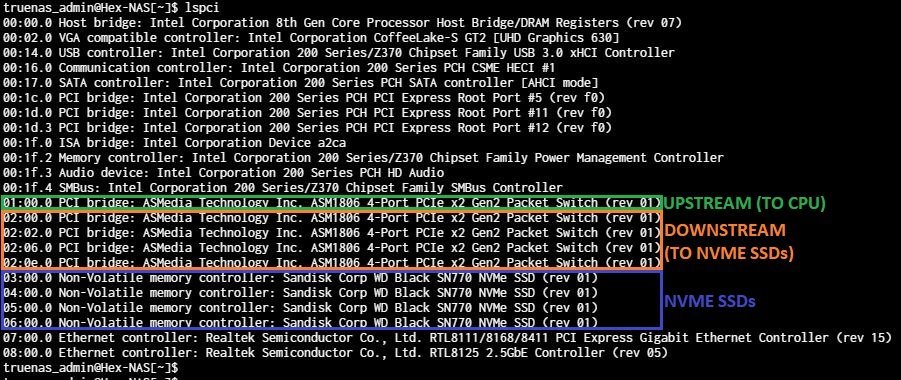

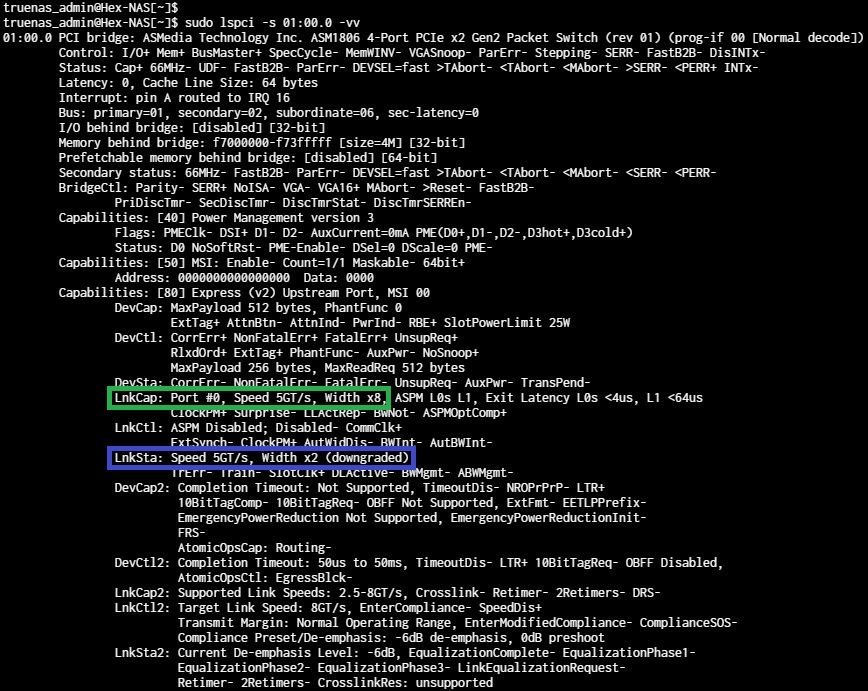

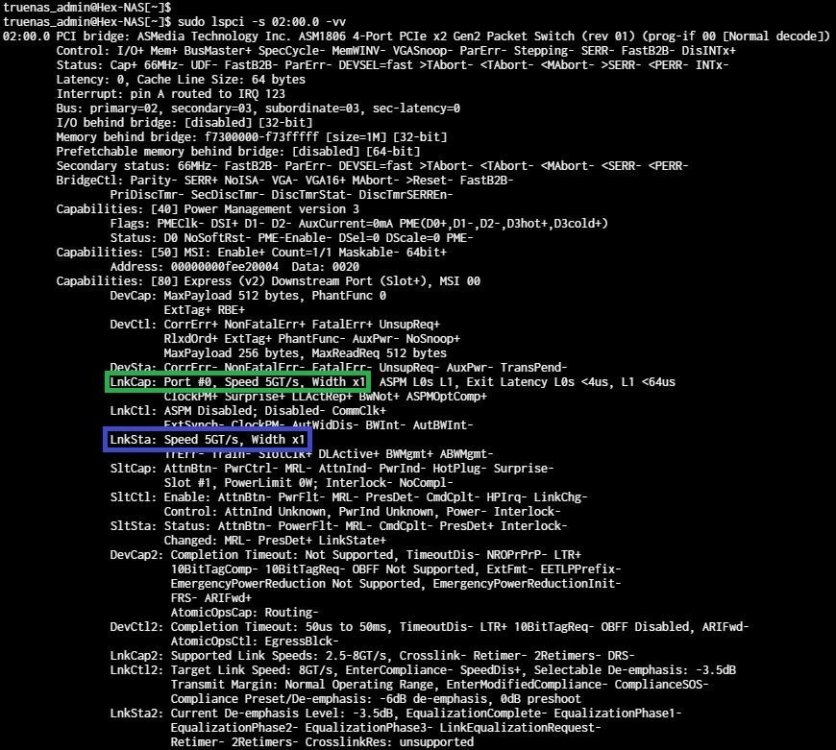

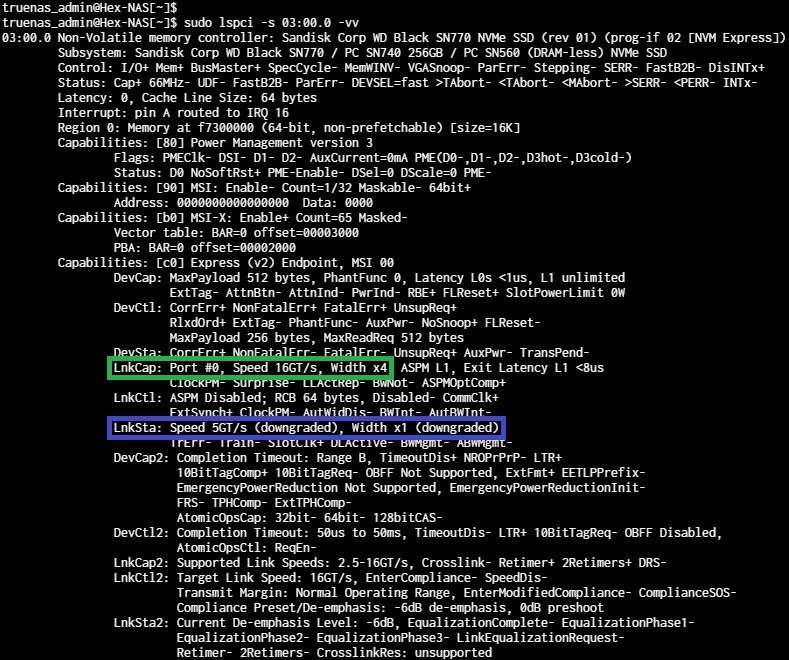

I just wanted to share a few things I've learned about the lspci command, as it's helped me understand how my NVMe storage devices connect.

The build is based on an Asus Prime H310T R2.0 motherboard with an i5-8400, 32GB of DDR4-2666, and a pair of SATA drives for the OS. I currently use it as a working 'temporary backup' location, to host my Steam library, and to run Pi-hole and a Windows VM (hosting legacy game servers!!!). The whole thing idles at 19W to 26W with just Pi-hole and the Windows VM in use. I've also added a 2.5 GbE NIC in the 'WiFi' slot, which allows quick access to my Steam library, while the (quite probably more reliable) onboard 1 GbE port is used for management and other 'services'...

The main storage comes from 4 x WD NVMe drives mounted on a PCIe card (2 on each side). The card is a SU-EM5204(A2), for which a quick 'google' turns up a variety of conflicting information, so let's see what we can learn from the lspci command...

The card appears to use an ASMedia ASM1806, which (according to the ASMedia website) is a PCIe Gen 2 switch with 2 upstream and 4 downstream ports. The upstream port reports that the link is capable (LnkCap) of 5GT/s (PCIe Gen 2) over 8 lanes (weird?); however, the link status (LnkSta) shows that it is connected over only 2, noting that the link width is 'downgraded'. This is to be expected, as I can see from the physical interface that the card is only wired for an x2 connection, and I also know that the motherboard only presents 2 lanes to the NVMe port (where the PCIe riser is connected). If I run the same command on one of the downstream ports, I can see that each one presents a single PCIe Gen 2 lane to its NVMe SSD. Looking at one of the NVMe SSDs, we can see that they are PCIe Gen 4 (16GT/s) x4 (4 lanes) but are operating at PCIe Gen 2 (5GT/s) x1 only.
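The LnkCap/LnkSta comparison above can be automated. Here is a minimal sketch in Python that assumes you have captured the output of `sudo lspci -vv -s <address>` (where `<address>` is the device's bus:device.function as listed by plain `lspci`) as a string. The function names are hypothetical, and the sample lines are illustrative values modelled on the upstream port described in the post, not output copied from this build. Because the exact layout of these lines varies between pciutils versions, the parser only looks for the `Speed NGT/s` and `Width xN` fields.

```python
import re
from typing import NamedTuple, Optional

SPEED_RE = re.compile(r"Speed\s+([\d.]+)GT/s")
WIDTH_RE = re.compile(r"Width\s+x(\d+)")


class Link(NamedTuple):
    speed_gts: float  # per-lane transfer rate in GT/s
    width: int        # number of lanes


def parse_link(lspci_text: str, field: str) -> Optional[Link]:
    """Extract speed and width from the first 'LnkCap:' or 'LnkSta:' line.

    'LnkCap2:'/'LnkSta2:' lines are skipped because they do not start with field + ':'.
    """
    for line in lspci_text.splitlines():
        if line.strip().startswith(field + ":"):
            speed = SPEED_RE.search(line)
            width = WIDTH_RE.search(line)
            if speed and width:
                return Link(float(speed.group(1)), int(width.group(1)))
    return None


def describe(lspci_text: str) -> str:
    """Compare what the link can do (LnkCap) with what it negotiated (LnkSta)."""
    cap = parse_link(lspci_text, "LnkCap")
    sta = parse_link(lspci_text, "LnkSta")
    if cap is None or sta is None:
        return "no PCIe link information found"
    notes = []
    if sta.speed_gts < cap.speed_gts:
        notes.append("speed downgraded")
    if sta.width < cap.width:
        notes.append("width downgraded")
    status = ", ".join(notes) if notes else "running at full capability"
    return (f"capable of {cap.speed_gts:g}GT/s x{cap.width}, "
            f"running at {sta.speed_gts:g}GT/s x{sta.width} ({status})")


if __name__ == "__main__":
    # Illustrative lines resembling the switch's upstream port described in the post.
    sample = """
        LnkCap: Port #0, Speed 5GT/s, Width x8, ASPM L0s L1
        LnkSta: Speed 5GT/s, Width x2 (downgraded)
    """
    print(describe(sample))
```

Run against the sample, this reports a 5GT/s x8-capable link running at x2 with the width downgraded, which is the same conclusion the post reaches by reading the output by eye.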
Putting all this together, I now understand that each drive connects to the PCIe switch at PCIe Gen 2 x1, and the switch then connects to the CPU at PCIe Gen 2 x2. This means the 4 drives share 10GT/s back to the CPU, which after PCIe Gen 2's 8b/10b encoding is roughly 1 GB/s of data (less protocol and switching overheads). As 1 of the 4 drives is for redundancy / parity, only about 75% of that is usable data, so I'm probably getting at most about 750 MB/s (7.5 of the 10GT/s) of usable data to the array. Next I'm going to look at how to actually test the internal performance, right after I've found a way of recovering my data from the HDDs you may have noticed (now disconnected) in the first pic...
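The bandwidth reasoning above can be written out as a small calculation. Here is a minimal sketch in Python. The per-generation transfer rates and encoding schemes (8b/10b for Gen 1/2, 128b/130b for Gen 3 and later) come from the PCIe specifications. Treating the link as trained to the lower generation and narrower width of its two ends is a simplification, and setting aside one drive's share for parity is the post's own approximation. Protocol and switching overheads are ignored, so real throughput will be lower. The helper names are hypothetical.

```python
# Per-lane raw transfer rate (GT/s) and line-encoding efficiency by PCIe generation.
# Gen 1/2 use 8b/10b encoding; Gen 3 and later use 128b/130b.
PCIE_GENS = {
    1: (2.5, 8 / 10),
    2: (5.0, 8 / 10),
    3: (8.0, 128 / 130),
    4: (16.0, 128 / 130),
}


def negotiated_link(gen_a: int, width_a: int, gen_b: int, width_b: int) -> tuple[int, int]:
    """Simplified link training: both ends settle on the lower gen and narrower width."""
    return min(gen_a, gen_b), min(width_a, width_b)


def link_gbytes_per_s(gen: int, lanes: int) -> float:
    """Bandwidth after line encoding, before protocol and switch overheads."""
    gts, efficiency = PCIE_GENS[gen]
    return gts * efficiency * lanes / 8  # Gbit/s -> GB/s


def array_data_gbytes_per_s(upstream: float, drives: int, parity_drives: int) -> float:
    """Share of the upstream bandwidth carrying data rather than parity."""
    return upstream * (drives - parity_drives) / drives


if __name__ == "__main__":
    # Each SSD (Gen 4 x4) sits behind a Gen 2 x1 switch port.
    drive_link = negotiated_link(4, 4, 2, 1)
    # The switch's upstream port (Gen 2 x8 capable) only has x2 wired to the CPU.
    upstream_link = negotiated_link(2, 8, 2, 2)
    per_drive = link_gbytes_per_s(*drive_link)
    upstream = link_gbytes_per_s(*upstream_link)
    print(f"per drive: {per_drive:.2f} GB/s, upstream: {upstream:.2f} GB/s")
    print(f"four drives could offer {4 * per_drive:.2f} GB/s, capped at {upstream:.2f} GB/s")
    print(f"data share with one parity drive: {array_data_gbytes_per_s(upstream, 4, 1):.2f} GB/s")
```

This sketch shows that the x2 upstream link, not the individual x1 drive links, is the bottleneck. Each drive could move about 0.5 GB/s on its own, but all four together are capped at about 1 GB/s.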

-

shplig changed their profile photo

-

Got it working using this solution:

- Last week

-

@Iliyria does installing via TrueNAS break anything on the HexOS side? I haven't messed with Hex since December and can't remember if that's the case. If I recall correctly, when you install Plex via TrueNAS, it will still show up in the HexOS dashboard, etc.? I'm going to be doing some NAS rebuilding this week and might make the jump to HexOS. I've got an A770, a B580, and a 4070 I can use in the box. I would prefer to use the B580 if it already works; if not, I'll probably use the A770 until the B580 is officially supported.

-

There is nothing announced at this time. I wouldn't bet on it, though, since we are still at a discounted price right now.

-

Is there going to be another sale before the full release, bringing it down to 100 dollars?

-

The GL.iNet Comet also looks interesting, especially these specs: 2K@60FPS video with H.264 hardware encoding for smooth performance, and ultra-low latency (30ms-60ms) for real-time remote control. And it's at almost the same price point.

-

I'm in the same boat, @Matt11. I'm running a Dell R730xd and currently doing the same thing with IPMI; I just have a Word document with the hex codes I need to input. I don't really see HexOS ever implementing fan control within the software. Thankfully, since my server just runs 24/7 and my CPU load remains mostly static, I don't have a huge problem with setting and forgetting the fan speed via IPMI.
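The hex codes mentioned above can also be generated instead of looked up. Here is a minimal sketch in Python that assumes the raw IPMI commands widely circulated in the homelab community for Dell iDRAC 7/8 servers such as the R730xd: `ipmitool raw 0x30 0x30 0x01 0x00` to take manual fan control, and `ipmitool raw 0x30 0x30 0x02 0xff <pct>` to set all fans to a duty cycle. Dell does not officially document these commands, so treat them as an assumption and verify them on your own hardware. The helper names are hypothetical, and the script only builds and prints the commands without running them.

```python
import shlex


def fan_speed_byte(percent: int) -> str:
    """Convert a fan duty cycle (0-100%) to the hex byte used in the raw command."""
    if not 0 <= percent <= 100:
        raise ValueError("fan percentage must be between 0 and 100")
    return f"0x{percent:02x}"


def manual_fan_control_cmd() -> list[str]:
    # Assumed (community-documented, unofficial) raw command that disables automatic fan control.
    return ["ipmitool", "raw", "0x30", "0x30", "0x01", "0x00"]


def set_fan_speed_cmd(percent: int) -> list[str]:
    # Assumed raw command; 0xff targets all fans in its commonly cited form.
    return ["ipmitool", "raw", "0x30", "0x30", "0x02", "0xff", fan_speed_byte(percent)]


if __name__ == "__main__":
    print(shlex.join(manual_fan_control_cmd()))
    for pct in (15, 20, 30):
        print(shlex.join(set_fan_speed_cmd(pct)))
```

For example, 20% becomes `0x14`, which is the kind of value otherwise kept in a lookup document.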

-

So far my Jets have been flawless and simple to install. I'd be interested to see how they compare to the GL.iNet Comet that'll come out soon.

-

Hello, I am getting the same issue... I have tried all of the fixes that I have seen online... does anyone have any other details to get this working? I am a total noob, so any help will be greatly appreciated. ty

-

I bought 2, but the TrueNAS server I just built has iDRAC, so I no longer need one of them. I'm sure I'll find something to use it on... I might just waste it on my pfSense router.

-

I thought the same. I also have a BliKVM V4, which cost me more than 200 euros. It does the job, but it's very unpolished compared to the JetKVM.

-

When I saw the price initially, I thought it was too good to be true and that I would just be donating and never receive a product... until the YouTubers started to receive theirs. I feel you get pretty good value for your money. Mine shipped the other day; I'm just waiting for FedEx/UPS to deliver it.

-

Potential First NAS Server Build Questions and Concerns

Mobius replied to Rubiksgocraft's topic in Show & Tell

You're welcome, glad I could help!

-

Potential First NAS Server Build Questions and Concerns

Rubiksgocraft replied to Rubiksgocraft's topic in Show & Tell

@Mobius thank you very much for your input. The drive cage thing makes a ton of sense, and I didn't know about CMR drives, so I will do that. I will definitely look into upgrading the RAM at some point if it all works out great. Thanks again :)

-

Omegaus changed their profile photo

-

This tracks for me. I did not configure my pools during install and added pools later in TrueNAS. Following the next post's suggestion to go into the TrueNAS settings and configure a pool for apps worked.